Synthetic Data for Object Detection, Part 3 (AILiveSim)

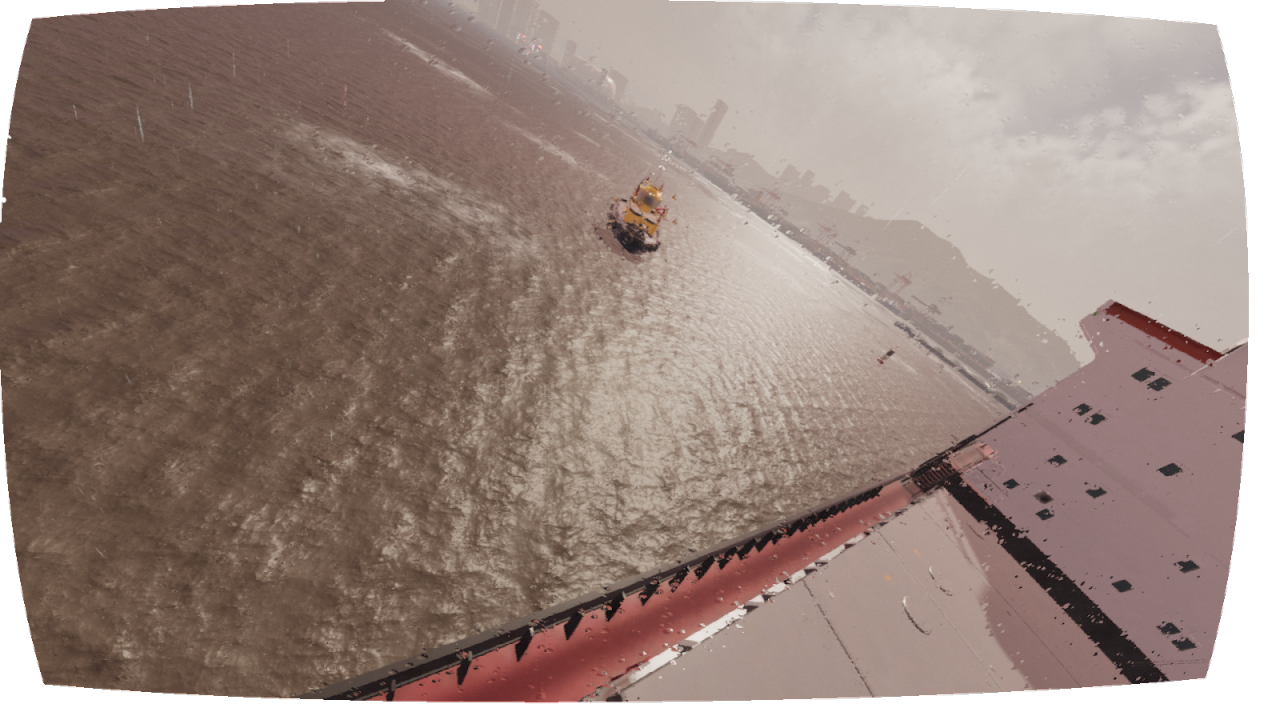

I’ve published Part 3 of the “Synthetic Data for Object Detection” series via AILiveSim. This part is a head-to-head comparison of models trained on real vs. synthetic data, focusing on how well each generalizes to a third-party benchmark.

In this part, I cover:

- A comparative analysis of two YOLOv5 models: one trained on AILiveSim synthetic data, the other on real data from Roboflow.
- A two-phase protocol: matched training setups first, then testing both models on the Singapore Maritime Dataset (SMD), a third-party benchmark, for an unbiased evaluation.
- Dataset contrasts: the synthetic set is higher-resolution, multi-object, and varied in weather and sea state; the real set is smaller, single-object, and less varied.
- Results: the synthetic-trained model generalizes better to SMD, which highlights the value of controllable, fit-for-purpose synthetic data.
- Reflections on data curation, domain alignment, and why control over training data can matter more than “realness” alone.
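
To make the second phase of the protocol concrete, here is a minimal Python sketch of scoring two detectors against the same held-out benchmark. It is not the code used in the article. The function names, the IoU threshold of 0.5, and the toy boxes are all assumptions for illustration, and the greedy matching ignores confidence ranking, so it is simpler than a full mAP evaluation.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    truth: Dict[str, List[Box]],
    preds: Dict[str, List[Box]],
    thr: float = 0.5,  # assumed threshold, not taken from the article
) -> Tuple[float, float]:
    """Greedy one-to-one matching of predictions to ground truth per image.

    Only images listed in `truth` are scored.
    """
    tp = fp = fn = 0
    for image_id, gt_boxes in truth.items():
        unmatched = list(gt_boxes)
        for p in preds.get(image_id, []):
            # Pick the still-unmatched ground-truth box that overlaps most.
            best = max(unmatched, key=lambda g: iou(p, g), default=None)
            if best is not None and iou(p, best) >= thr:
                unmatched.remove(best)
                tp += 1
            else:
                fp += 1
        fn += len(unmatched)  # ground-truth boxes no prediction covered
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy placeholder data (hypothetical, NOT results from the article).
truth = {"frame_001": [(10, 10, 50, 50), (100, 100, 140, 140)]}
preds_model_a = {"frame_001": [(12, 12, 50, 50), (100, 100, 140, 140)]}
preds_model_b = {"frame_001": [(12, 12, 50, 50), (300, 300, 340, 340)]}

for name, preds in [("model_a", preds_model_a), ("model_b", preds_model_b)]:
    p, r = precision_recall(truth, preds)
    print(f"{name}: precision={p:.2f} recall={r:.2f}")
```

The point of the design is that both models are scored by the same function on the same ground truth, so any gap in precision or recall comes from the training data rather than from the evaluation setup.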

Read the full article on LinkedIn: Synthetic Data for Object Detection, Part 3 (AILiveSim)